A Brief History of Machine Learning

Machine learning (ML) is a crucial tool for putting artificial intelligence innovations to use. Because it can learn and make decisions, machine learning is sometimes confused with artificial intelligence (AI), but it is actually a subset of AI. It was part of the development of AI until the late 1970s, after which it split off to evolve independently. Machine learning now drives many cutting-edge innovations and has become an important tool for cloud computing and e-commerce. This article gives a brief history of machine learning and its use in handling information.

For many companies today, machine learning is an essential component of modern business and research. It uses algorithms and models, such as neural networks, to help computer systems improve their performance over time. Rather than being explicitly programmed to make decisions, machine learning algorithms automatically build a mathematical model from sample data, known as “training data.”
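To make “building a model from training data” concrete, here is a minimal sketch using ordinary least squares to fit a straight line. It is a deliberately simple method, not a neural network: the program is never told the rule linking x to y; it estimates the slope and intercept from the sample points. The data values and the function name `fit_line` are hypothetical.

```python
def fit_line(xs, ys):
    """Learn y = slope * x + intercept from examples using ordinary least squares."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Slope = covariance(x, y) / variance(x); the fitted line passes through the means.
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    slope = cov / var
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Hypothetical training data: inputs and their observed outputs.
train_x = [1, 2, 3, 4, 5]
train_y = [3.1, 4.9, 7.2, 8.8, 11.0]
slope, intercept = fit_line(train_x, train_y)
print(f"model: y = {slope:.2f} * x + {intercept:.2f}")
print(f"prediction for x = 6: {slope * 6 + intercept:.2f}")
```

The learned slope and intercept are the “mathematical model”; new inputs are then handled by that model rather than by hand-written rules.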

Machine learning is based in part on a model of brain cell interaction. Donald Hebb created the model in 1949 in his book “The Organization of Behavior,” which presents Hebb’s theories on neuron excitement and communication between neurons.

Hebb’s Concept

Hebb put it this way: “When one cell repeatedly assists in firing another, the axon of the first cell develops synaptic knobs (or enlarges them if they already exist) in contact with the soma of the second cell.” Translated to artificial neural networks and artificial neurons, Hebb’s model becomes a way of changing the relationships between artificial neurons (also called nodes) and the changes to each individual neuron. The connection between two neurons/nodes strengthens when they are activated simultaneously and weakens when they are activated separately. The word “weight” describes these connections: nodes/neurons that tend to be both positive or both negative develop strong positive weights, while nodes that tend to have opposite signs develop strong negative weights (for example, 1×1=1, -1×-1=1, -1×1=-1).
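For artificial neurons, Hebb’s idea is commonly written as a weight update in which the weight between nodes i and j changes by η × xᵢ × xⱼ, the product of their activations scaled by a learning rate η. The sketch below assumes that textbook form with bipolar (+1/-1) activations; the function name `hebbian_update`, the learning rate of 0.1, and the three-node patterns are illustrative choices, not taken from Hebb’s book. It reproduces the sign behavior described above: nodes that agree end up with a positive weight, and nodes that disagree end up with a negative one.

```python
def hebbian_update(weights, activations, learning_rate=0.1):
    """One Hebbian step: w[i][j] += learning_rate * a[i] * a[j] for every pair i != j."""
    n = len(activations)
    for i in range(n):
        for j in range(n):
            if i != j:  # this sketch assumes no self-connections
                weights[i][j] += learning_rate * activations[i] * activations[j]
    return weights


# Three nodes with bipolar (+1/-1) activity: nodes 0 and 1 always share a sign,
# while node 2 always has the opposite sign.
patterns = [[1, 1, -1], [-1, -1, 1], [1, 1, -1]]

weights = [[0.0] * 3 for _ in range(3)]
for pattern in patterns:
    hebbian_update(weights, pattern)

print(weights[0][1] > 0)  # True: nodes 0 and 1 agree, so their weight is positive
print(weights[0][2] < 0)  # True: nodes 0 and 2 disagree, so their weight is negative
```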

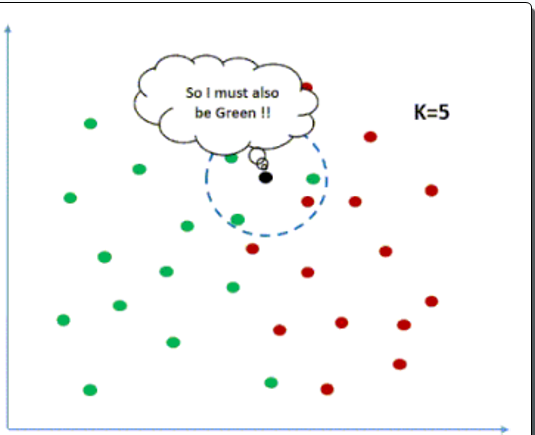

The nearest neighbor algorithm, developed in 1967, marked the beginning of basic pattern recognition. It has been used for mapping routes and was one of the earliest methods applied to the traveling salesperson problem of finding the most efficient route. Using it, a salesperson enters a starting city and has the program repeatedly travel to the nearest unvisited city until all cities have been visited. Marcello Pelillo has been credited with inventing the “nearest neighbor rule.” He, in turn, credits the famous 1967 paper by Cover and Hart. K-nearest neighbor classification is a closely related example: a new point is assigned the class held by the majority of its k nearest neighbors, so a point surrounded mostly by green points is classified as green.
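Both ideas in this paragraph can be sketched in a few lines of Python. The first function is the greedy nearest-neighbor route heuristic (always travel to the closest unvisited city); the second is k-nearest neighbor classification by majority vote. The city coordinates, the colored points, the choice of k=3, and the function names are hypothetical, and Euclidean distance is assumed.

```python
import math
from collections import Counter


def nearest_neighbor_tour(cities, start):
    """Greedy route: from the current city, always move to the closest unvisited city."""
    tour = [start]
    unvisited = set(cities) - {start}
    while unvisited:
        here = cities[tour[-1]]
        closest = min(unvisited, key=lambda name: math.dist(here, cities[name]))
        tour.append(closest)
        unvisited.remove(closest)
    return tour


def knn_classify(labeled_points, query, k=3):
    """Assign `query` the majority label among its k closest labeled points."""
    by_distance = sorted(labeled_points, key=lambda item: math.dist(item[0], query))
    votes = Counter(label for _, label in by_distance[:k])
    return votes.most_common(1)[0][0]


# Hypothetical city coordinates.
cities = {"A": (0, 0), "B": (1, 0), "C": (5, 0), "D": (2, 0)}
print(nearest_neighbor_tour(cities, "A"))  # ['A', 'B', 'D', 'C']

# Hypothetical labeled points: a green cluster near the origin, red points farther out.
points = [
    ((0, 0), "green"), ((1, 0), "green"), ((0, 1), "green"),
    ((1, 1), "red"), ((5, 5), "red"), ((6, 5), "red"),
]
print(knn_classify(points, (0.4, 0.4), k=3))  # green
```

The greedy route is fast but not guaranteed to be the shortest tour, which is why it is treated as a heuristic for the traveling salesperson problem.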

Multilayers

The discovery and use of multiple layers opened a new path in neural network research in the 1960s. Researchers found that using two or more layers in the perceptron provided far more processing power than a single-layer perceptron. After the perceptron opened the door to “layers” in networks, other kinds of neural networks were created, and the variety of neural networks continues to grow. The use of multiple layers led to feedforward neural networks and backpropagation.
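The gain from extra layers is easiest to see with the XOR function. The sketch below applies the classic perceptron learning rule to a single threshold unit, which can only draw one straight line through its inputs: it learns AND, but no setting of its weights reproduces XOR, whose positive and negative cases cannot be separated by a single line. The datasets, epoch limit, and function names are illustrative choices for this example.

```python
def predict(w, b, x):
    """Single threshold unit: output 1 if the weighted sum is positive, else 0."""
    return 1 if w[0] * x[0] + w[1] * x[1] + b > 0 else 0


def train_perceptron(data, epochs=100, lr=1.0):
    """Classic perceptron learning rule: nudge the weights after every mistake."""
    w, b = [0.0, 0.0], 0.0
    for _ in range(epochs):
        mistakes = 0
        for x, target in data:
            err = target - predict(w, b, x)
            if err:
                w[0] += lr * err * x[0]
                w[1] += lr * err * x[1]
                b += lr * err
                mistakes += 1
        if mistakes == 0:  # every example is classified correctly
            break
    return w, b


def accuracy(w, b, data):
    return sum(predict(w, b, x) == target for x, target in data) / len(data)


AND = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
XOR = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 0)]

print(accuracy(*train_perceptron(AND), AND))  # 1.0: one line separates AND
print(accuracy(*train_perceptron(XOR), XOR))  # below 1.0: no single line separates XOR
```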

Backpropagation, developed in the 1970s, allows a network to adapt to new situations by adjusting the weights of its hidden layers of neurons/nodes. The name is short for “the backward propagation of errors”: an error is computed at the output and then distributed backward through the network’s layers so the weights can be updated for learning.

Hidden layers allow an artificial neural network (ANN) to handle more complex tasks than the early perceptrons could. ANNs are a primary machine learning technology. Neural networks have input and output layers and one or more hidden layers designed to transform the input into data the output layer can use. Hidden layers are excellent at finding patterns too complex for a human programmer to detect, that is, patterns a person could not find and then teach the machine to recognize.
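The following sketch shows backpropagation in a tiny network with one hidden layer, trained on XOR with plain full-batch gradient descent on a squared-error loss. It assumes sigmoid activations, three hidden nodes, a learning rate of 0.5, and 5,000 epochs; these are illustrative choices, not values from the article. `loss_and_grads` runs the forward pass, computes the error signal at the output, and propagates it backward to the hidden-layer weights.

```python
import math
import random

# XOR truth table: the classic task a single-layer perceptron cannot learn.
XOR = [((0.0, 0.0), 0.0), ((0.0, 1.0), 1.0), ((1.0, 0.0), 1.0), ((1.0, 1.0), 0.0)]


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def forward(w1, w2, x):
    """Return hidden activations h and the network output y for input x."""
    # Each hidden row is [weight for x[0], weight for x[1], bias].
    h = [sigmoid(w[0] * x[0] + w[1] * x[1] + w[2]) for w in w1]
    # zip stops at len(h), so the output bias w2[-1] is added separately.
    y = sigmoid(sum(wj * hj for wj, hj in zip(w2, h)) + w2[-1])
    return h, y


def loss_and_grads(w1, w2, data):
    """Squared-error loss over `data` and its gradients, computed by backpropagation."""
    g1 = [[0.0, 0.0, 0.0] for _ in w1]
    g2 = [0.0] * len(w2)
    loss = 0.0
    for x, target in data:
        h, y = forward(w1, w2, x)
        loss += 0.5 * (y - target) ** 2
        # Error signal at the output node's pre-activation.
        d_out = (y - target) * y * (1.0 - y)
        g2[-1] += d_out
        for j, hj in enumerate(h):
            g2[j] += d_out * hj
            # Propagate the error backward through weight w2[j] to hidden node j.
            d_hid = d_out * w2[j] * hj * (1.0 - hj)
            g1[j][0] += d_hid * x[0]
            g1[j][1] += d_hid * x[1]
            g1[j][2] += d_hid
    return loss, g1, g2


def train(hidden=3, lr=0.5, epochs=5000, seed=0):
    """Full-batch gradient descent on XOR; returns weights and the loss per epoch."""
    rng = random.Random(seed)
    w1 = [[rng.uniform(-1.0, 1.0) for _ in range(3)] for _ in range(hidden)]
    w2 = [rng.uniform(-1.0, 1.0) for _ in range(hidden + 1)]
    history = []
    for _ in range(epochs):
        loss, g1, g2 = loss_and_grads(w1, w2, XOR)
        history.append(loss)
        for j in range(hidden):
            for k in range(3):
                w1[j][k] -= lr * g1[j][k]
        for j in range(hidden + 1):
            w2[j] -= lr * g2[j]
    return w1, w2, history


w1, w2, history = train()
print(f"loss went from {history[0]:.3f} to {history[-1]:.3f}")
```

Comparing the first and last entries of `history` shows the error shrinking; whether the network reaches a perfect XOR solution depends on the seed and the hidden-layer size, so the defaults are only a starting point.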

Machine Learning vs Artificial Intelligence

In the late 1970s and early 1980s, artificial intelligence research turned to logical, knowledge-based approaches rather than algorithms and abandoned neural network research, creating a rift between AI and machine learning. For almost a decade, the machine learning field, made up of researchers and technologists, struggled as it shifted its focus from training for AI to solving practical problems. The ML field, which kept its focus on neural networks, then flourished in the 1990s, largely thanks to the growth of the internet and the increasing availability of digital data.

Boosting

Boosting is an important advance in ML that turns weak learners into strong ones. The term first appeared in Robert Schapire’s 1990 paper “The Strength of Weak Learnability.” Most boosting algorithms repeatedly train weak classifiers and combine them into a final strong classifier, weighting each weak learner according to its accuracy. Boosting algorithms differ mainly in how they weight the training data points. Many popular methods fit into the AnyBoost framework, including AdaBoost, BrownBoost, LPBoost, MadaBoost, TotalBoost, XGBoost, and LogitBoost.
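AdaBoost, the first algorithm in the list above, can be sketched with one-dimensional “decision stumps” (single-threshold rules) as the weak learners. After each round, this sketch increases the weight of misclassified points and decreases the weight of correctly classified ones, so the next stump concentrates on the mistakes, and each stump’s vote is scaled by alpha = 0.5 * ln((1 - error) / error). The stump learner, the six-point dataset, and the function names are illustrative assumptions.

```python
import math


def best_stump(xs, ys, weights):
    """Weak learner: the single-threshold rule with the lowest weighted error."""
    best = None
    for threshold in sorted(set(xs)):
        for polarity in (1, -1):
            preds = [polarity if x >= threshold else -polarity for x in xs]
            err = sum(w for w, p, y in zip(weights, preds, ys) if p != y)
            if best is None or err < best[0]:
                best = (err, threshold, polarity)
    return best


def adaboost(xs, ys, rounds=10):
    """AdaBoost with decision stumps; labels must be +1 or -1."""
    n = len(xs)
    weights = [1.0 / n] * n
    ensemble = []
    for _ in range(rounds):
        err, threshold, polarity = best_stump(xs, ys, weights)
        err = max(err, 1e-10)  # avoid division by zero for a perfect stump
        if err >= 0.5:  # no better than chance: stop adding learners
            break
        alpha = 0.5 * math.log((1 - err) / err)
        ensemble.append((alpha, threshold, polarity))
        # Reweight: misclassified points gain weight, correct ones lose weight.
        for i, x in enumerate(xs):
            pred = polarity if x >= threshold else -polarity
            weights[i] *= math.exp(-alpha * ys[i] * pred)
        total = sum(weights)
        weights = [w / total for w in weights]
    return ensemble


def predict(ensemble, x):
    """Weighted vote of all stumps."""
    score = sum(a * (p if x >= t else -p) for a, t, p in ensemble)
    return 1 if score >= 0 else -1


xs = [1, 2, 3, 4, 5, 6]
ys = [1, 1, -1, -1, 1, 1]  # no single threshold separates these labels
model = adaboost(xs, ys, rounds=3)
print([predict(model, x) for x in xs])  # [1, 1, -1, -1, 1, 1]
```

No single stump classifies this data correctly, but in this sketch three weighted stumps together classify all six training points.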

Speech Recognition

Long short-term memory (LSTM), a neural network model proposed by Jürgen Schmidhuber and Sepp Hochreiter in 1997, is used today for much of speech recognition training. LSTM can learn tasks that require memory of events that happened thousands of discrete steps earlier, which is critical for speech recognition. Around 2007, LSTM began outperforming more traditional speech recognition systems. In 2015, Google’s speech recognition system reportedly improved by 49 percent using an LSTM trained with connectionist temporal classification (CTC).
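To show how an LSTM can hold on to information across many steps, here is a sketch of a single LSTM cell with one unit, using the standard forget, input, and output gates plus a candidate value. The parameter values are hypothetical and set by hand rather than learned, and the CTC training mentioned above is not shown. The forget gate is biased to stay almost fully open and the input gate only opens when the input is nonzero, so a single early signal survives a long run of empty inputs.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def lstm_step(x, h, c, p):
    """One step of a single-unit LSTM. p maps each gate name to (w_x, w_h, bias)."""
    def pre(gate):
        w_x, w_h, b = p[gate]
        return w_x * x + w_h * h + b

    f = sigmoid(pre("forget"))       # how much of the old cell state to keep
    i = sigmoid(pre("input"))        # how much new information to write
    g = math.tanh(pre("candidate"))  # the new information itself
    o = sigmoid(pre("output"))       # how much of the cell state to expose
    c_new = f * c + i * g
    h_new = o * math.tanh(c_new)
    return h_new, c_new


# Hypothetical hand-picked parameters (a trained LSTM would learn these).
PARAMS = {
    "forget": (0.0, 0.0, 10.0),    # nearly always keep the cell state
    "input": (10.0, 0.0, -5.0),    # open only when x is nonzero
    "candidate": (2.0, 0.0, 0.0),
    "output": (0.0, 0.0, 5.0),
}

sequence = [1.0] + [0.0] * 1000  # one event, then 1,000 quiet steps
h, c = 0.0, 0.0
for x in sequence:
    h, c = lstm_step(x, h, c, PARAMS)
print(f"cell state after 1,000 quiet steps: {c:.3f}")
```

In this sketch the cell state is still above 0.9 after 1,000 empty steps. In a trained network, the gate weights are learned from data, so the cell itself decides what to remember and what to forget.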

How Facial Recognition Became a Reality

In 2006, the Face Recognition Grand Challenge, a National Institute of Standards and Technology program, evaluated the popular face recognition algorithms of the time. Three-dimensional face scans, iris images, and high-resolution face images were tested. The results indicated that the new algorithms were ten times more accurate than the face recognition algorithms of 2002 and 100 times more accurate than those of 1995. Some of the algorithms outperformed human participants at recognizing faces and could even distinguish between identical twins.

In 2012, Google’s X Lab developed an ML system that could search for and find videos of cats on its own. In 2014, Facebook developed DeepFace, a system designed to recognize or verify individuals in photos with accuracy comparable to that of humans.

Machine Learning Today

Machine learning is currently responsible for some of the most significant advances in technology. It is being used in the growing field of self-driving cars and in space exploration, where it helps identify exoplanets. Stanford University has described machine learning as “the science of getting computers to act without being explicitly programmed.” Machine learning has given rise to a variety of new ideas and technologies, including supervised and unsupervised learning, new algorithms for robots, the Internet of Things, analytics tools, chatbots, and more.

Below are common applications of machine learning in the business world today:

- Processing Sales Data: Machine learning systems can analyze sales data to find patterns and trends, allowing companies to make better-informed decisions about pricing, inventory, and advertising strategies.

- Chatbots: Chatbots are software programs that simulate conversations with people. By learning from customer interactions, machine learning techniques can help chatbots become more accurate and efficient over time.

- Customer Relationship Management: Machine learning techniques can help companies analyze customer data to detect patterns and trends, allowing them to improve customer service and engagement.

- System Optimization: ML algorithms can analyze data and run simulations to find optimal or near-optimal solutions, or they can recommend the next best action.

- Fraud Detection: Machine learning techniques can examine enormous volumes of data to find trends and anomalies that may indicate fraudulent behavior.

- Predictive Maintenance: Machine learning techniques can examine data from sensors and other sources to predict when machinery is going to fail, enabling organizations to perform repairs before a breakdown occurs.

- Recommendation Systems: Machine learning algorithms can examine customer data to generate personalized recommendations for products and services, helping companies increase sales and improve customer satisfaction.

Google is actively using a machine learning technique known as instruction fine-tuning. The goal is to train an ML model to solve general natural language processing (NLP) problems. The method teaches the model to handle a variety of tasks rather than just one type of problem.

These are just a few of the applications of machine learning in business today. As technology advances, we may see even more innovative uses in the coming years. Machine learning models have become highly adaptive, learning continuously so that they grow more accurate the longer they operate. Integrating machine learning algorithms with new computing techniques further increases their flexibility and effectiveness. Combined with business analytics, machine learning has the potential to solve a wide range of organizational challenges. Modern ML models are used to forecast everything from disease outbreaks to stock market fluctuations.