What is a neural network?

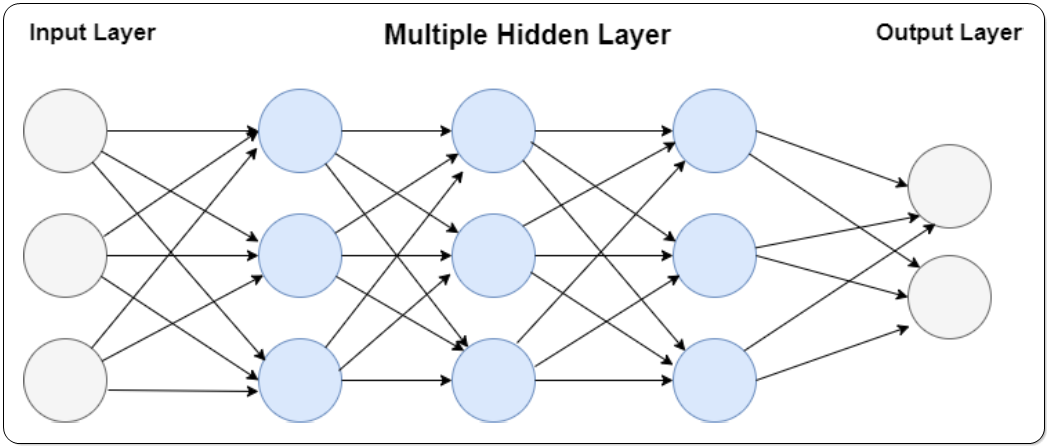

Neural networks, also known as artificial neural networks (ANNs) or simulated neural networks (SNNs), are a subset of machine learning (ML) and form the foundation of deep learning techniques. Their name and structure are inspired by the human brain, and they mimic the way biological neurons signal to one another. ANNs are made up of layers of nodes: an input layer, one or more hidden layers, and an output layer. Each node, or artificial neuron, connects to other nodes, and each connection carries a weight; each node also has a threshold. If the output of a node exceeds its threshold value, that node is activated and sends data to the next layer of the network. Otherwise, no data is passed along to the next layer.
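
To make the weight-and-threshold rule concrete, here is a minimal sketch of a single layer of threshold neurons in plain Python. The function names `neuron_fires` and `layer_output`, and all weight and threshold values, are hypothetical and chosen only for illustration; real networks usually replace the hard threshold with a smooth activation function.

```python
def neuron_fires(inputs, weights, threshold):
    """Return 1 if the weighted sum of the inputs exceeds the threshold, else 0."""
    if len(inputs) != len(weights):
        raise ValueError("inputs and weights must have the same length")
    weighted_sum = sum(x * w for x, w in zip(inputs, weights))
    return 1 if weighted_sum > threshold else 0


def layer_output(inputs, neurons):
    """Evaluate every (weights, threshold) neuron in a layer on the same inputs.

    The resulting list of 0/1 values is what the next layer would receive:
    a 0 means that neuron passes no data forward.
    """
    return [neuron_fires(inputs, weights, threshold) for weights, threshold in neurons]


# Hypothetical hidden layer with two neurons and three inputs.
hidden_layer = [
    ([0.5, -0.2, 0.3], 0.6),  # weighted sum 0.8 > 0.6, so it fires
    ([-0.4, 0.9, 0.1], 0.0),  # weighted sum about -0.3 <= 0.0, so it stays silent
]
print(layer_output([1.0, 0.0, 1.0], hidden_layer))  # [1, 0]
```

Each neuron sees every input, which is why the layer is a simple list comprehension over neurons.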

Neural networks rely on training data to learn and to improve their accuracy over time. Once these learning algorithms have been fine-tuned for accuracy, they become powerful tools in computer science and AI, allowing us to classify and cluster data at high speed. Compared with manual identification by human experts, speech recognition or image recognition tasks can take minutes rather than hours. Google’s search algorithm is one of the best-known examples of a neural network.
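
The idea of "learning from training data to improve accuracy" can be illustrated with the classic perceptron learning rule. This is a minimal sketch under that assumption only: modern networks are usually trained with gradient descent and backpropagation instead, and the AND-gate dataset and helper names below are hypothetical.

```python
def predict(weights, bias, x):
    """Step activation: output 1 if the weighted sum plus bias is positive."""
    return 1 if sum(w * xi for w, xi in zip(weights, x)) + bias > 0 else 0


def accuracy(weights, bias, data):
    """Fraction of (inputs, label) pairs that the neuron classifies correctly."""
    return sum(predict(weights, bias, x) == y for x, y in data) / len(data)


def train_perceptron(data, epochs=10, lr=1.0):
    """Perceptron learning rule: adjust weights after every misclassified example."""
    weights, bias = [0.0] * len(data[0][0]), 0.0
    for _ in range(epochs):
        for x, y in data:
            error = y - predict(weights, bias, x)  # -1, 0, or +1
            weights = [w + lr * error * xi for w, xi in zip(weights, x)]
            bias += lr * error
    return weights, bias


# Hypothetical training data: the logical AND of two binary inputs.
and_data = [([0, 0], 0), ([0, 1], 0), ([1, 0], 0), ([1, 1], 1)]
print("before training:", accuracy([0.0, 0.0], 0.0, and_data))  # 0.75
w, b = train_perceptron(and_data)
print("after training:", accuracy(w, b, and_data))  # 1.0
```

Each pass over the data nudges the weights only when the prediction is wrong, so accuracy on this small dataset rises until every example is classified correctly.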

Types of Neural Networks

There are several types of neural networks, each suited to a particular purpose. While the following is not an exhaustive list, it covers the types you are most likely to encounter in common use cases:

Feedforward neural networks, or multi-layer perceptrons (MLPs), are the kind of network this article has mostly described so far. They consist of an input layer, one or more hidden layers, and an output layer. Although these networks are commonly called MLPs, it is worth noting that they are usually made up of sigmoid neurons rather than perceptrons, because most real-world problems are nonlinear. These models are typically trained by feeding them data, and they form the basis for computer vision, natural language processing, and other neural networks.
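
A forward pass through a small feedforward network of sigmoid neurons might look like the following sketch. The layer sizes and every weight and bias value are hypothetical; in practice these parameters would be learned from training data rather than written by hand.

```python
import math


def sigmoid(z):
    """Smooth, nonlinear activation that maps any real number into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def dense(inputs, weights, biases):
    """Fully connected layer: each row of `weights` drives one sigmoid neuron."""
    return [
        sigmoid(sum(w * x for w, x in zip(row, inputs)) + b)
        for row, b in zip(weights, biases)
    ]


def mlp_forward(inputs, layers):
    """Pass the input through each (weights, biases) layer in order.

    Data only flows forward (input -> hidden -> output); nothing loops back.
    """
    activations = inputs
    for weights, biases in layers:
        activations = dense(activations, weights, biases)
    return activations


# Hypothetical parameters: 2 inputs -> 3 hidden sigmoid neurons -> 1 output.
layers = [
    ([[0.2, -0.4], [0.7, 0.1], [-0.5, 0.3]], [0.0, -0.1, 0.2]),  # hidden layer
    ([[0.6, -0.3, 0.8]], [0.05]),                                # output layer
]
print(mlp_forward([1.0, 0.5], layers))  # one value strictly between 0 and 1
```

Because the sigmoid is nonlinear, stacking these layers lets the network represent relationships that a single linear threshold unit cannot.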

Recurrent neural networks (RNNs) are distinguished by their feedback loops, which feed a layer's output back in as input at the next step. These models are typically used with time-series data to make predictions about future outcomes, such as stock market projections or sales forecasts.
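
The loop that sets RNNs apart can be shown with a single recurrent unit stepping through a sequence. This is a minimal sketch assuming an Elman-style update with a `tanh` activation; the scalar weights `w_x`, `w_h`, and `b` are hypothetical, and a real forecaster would add an output layer and learn these values from data.

```python
import math


def rnn_forward(sequence, w_x, w_h, b):
    """Run a one-unit Elman-style RNN over a sequence of numbers.

    At each time step the new hidden state depends on the current input AND
    the previous hidden state; that feedback is the loop that defines an RNN.
    """
    h = 0.0  # initial hidden state
    states = []
    for x in sequence:
        h = math.tanh(w_x * x + w_h * h + b)
        states.append(h)
    return states


# Hypothetical weights; the same values arrive in a different order.
early_spike = rnn_forward([1.0, 0.0, 0.0], w_x=0.5, w_h=0.8, b=0.0)
late_spike = rnn_forward([0.0, 0.0, 1.0], w_x=0.5, w_h=0.8, b=0.0)
print(early_spike[-1], late_spike[-1])  # differ: the state remembers order
```

A feedforward network given these two inputs as an unordered set could not tell them apart; the recurrent state carries information about when each value appeared, which is what makes RNNs a natural fit for time series.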

Convolutional neural networks (CNNs) are similar to feedforward networks, but they are typically used for image recognition, pattern recognition, and/or computer vision. These networks apply principles from linear algebra, particularly matrix multiplication, to identify patterns within an image.
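
The core operation in a convolutional layer slides a small kernel over the image and takes a weighted sum at each position. The sketch below assumes a "valid" convolution with stride 1 and no padding, and uses a hand-picked edge-detecting kernel on a hypothetical 4x4 image; in a trained CNN the kernel values would be learned. Libraries often implement this operation by rearranging image patches so that it becomes a single matrix multiplication, which is one way the linear algebra mentioned above shows up in practice.

```python
def conv2d(image, kernel):
    """'Valid' 2-D convolution with stride 1 (strictly cross-correlation: the
    kernel is not flipped, which is what CNN layers typically compute)."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(kernel[i][j] * image[r + i][c + j] for i in range(kh) for j in range(kw))
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]


# Hypothetical 4x4 grayscale image: dark left half, bright right half.
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]
# Hand-picked 2x2 kernel that responds to dark-to-bright vertical edges.
kernel = [
    [-1, 1],
    [-1, 1],
]
for row in conv2d(image, kernel):
    print(row)  # [0, 2, 0] on every row: the edge sits between columns 1 and 2
```

The same small kernel is reused at every position, so the layer detects a pattern wherever it appears in the image while keeping the number of weights small.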

How are neural networks and deep neural networks different?

Neural networks are a basic concept in machine learning and can be simple (shallow) or complex (deep), depending on the number of hidden layers. Deep learning, on the other hand, is a field of machine learning that focuses on designing and applying deep neural networks with several hidden layers. Deep learning has shown outstanding performance on complex problems involving unstructured data, making it an essential component of modern AI and machine learning systems.
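
Since depth comes down to the number of hidden layers, one way to see the difference is to build a shallow and a deeper network from the same code and compare them. In this sketch the layer sizes, the random initialization, and the use of ReLU activations in the hidden layers are all illustrative assumptions, not a prescription for how deep networks must be built.

```python
import random


def make_layers(sizes, seed=0):
    """Random (weights, biases) for each pair of consecutive layer sizes."""
    rng = random.Random(seed)
    return [
        ([[rng.uniform(-1.0, 1.0) for _ in range(n_in)] for _ in range(n_out)], [0.0] * n_out)
        for n_in, n_out in zip(sizes, sizes[1:])
    ]


def forward(x, layers):
    """ReLU on hidden layers (an assumed choice), linear output on the last layer."""
    for k, (weights, biases) in enumerate(layers):
        z = [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weights, biases)]
        x = z if k == len(layers) - 1 else [max(0.0, v) for v in z]
    return x


def hidden_layer_count(sizes):
    """Everything between the input and output layers is a hidden layer."""
    return len(sizes) - 2


def parameter_count(layers):
    """Total number of weights plus biases across all layers."""
    return sum(len(w) * len(w[0]) + len(b) for w, b in layers)


# Hypothetical architectures: same input and output sizes, different depth.
for name, sizes in [("shallow", [4, 8, 1]), ("deep", [4, 16, 16, 16, 1])]:
    net = make_layers(sizes)
    print(name, hidden_layer_count(sizes), parameter_count(net), forward([0.1, 0.2, 0.3, 0.4], net))
```

Both networks map four inputs to one output; the deeper one has three hidden layers instead of one and far more parameters (641 versus 49 here), which is the extra capacity deep learning relies on for complex, unstructured data.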

Conclusion

This article introduced neural networks in machine learning and outlined their main types to give an overview of how they work. Distinguishing MLPs, RNNs, and CNNs from one another provides a foundation for understanding neural networks within the broader field of machine learning.