WHAT IS EXPLAINABLE AI?

Explainable AI (XAI) is a set of methods and tools that make machine learning models more transparent by revealing the logic behind their outputs. This transparency helps stakeholders meet legal and regulatory requirements, particularly in sensitive industries such as banking and healthcare. XAI also helps developers improve and debug models by detecting biases and fixing faults, which leads to more trustworthy AI systems. In turn, this openness builds trust in AI outputs and encourages wider adoption of AI across sectors.

Explainable AI is critical for organizations that need to understand and trust AI decision-making, because it helps people make sense of machine learning methods, including deep learning and neural networks. AI models are often described as black boxes, and biases introduced during training can be hard to spot. With explainability in place, models can be continuously monitored and managed, which supports transparency and makes it possible to evaluate their business impact. Explainable AI also increases end-user confidence, improves model auditability, and encourages productive use of AI while reducing reputational risk.

Responsible AI is an approach to adopting AI at scale in real-world organizations that prioritizes fairness, model explainability, and accountability. To adopt AI ethically, organizations must build ethical principles into their AI applications and processes, so that the resulting systems are founded on trust and transparency and can be deployed responsibly.

Difference between AI and Explainable AI (XAI)

| Aspect | Artificial Intelligence | Explainable AI (XAI) |
| --- | --- | --- |
| Transparency | Often operates as a “black box,” offering little insight into how outputs are produced. | Uses dedicated techniques to make model behavior transparent and understandable. |
| Understanding | Even AI developers often have limited insight into how an algorithm reaches its conclusions. | Provides ways to understand the reasoning behind predictions and decisions. |
| Regulatory compliance | Meeting regulatory requirements is difficult because decisions cannot easily be explained. | Produces interpretable outputs that help demonstrate compliance with laws and regulations. |
| User trust | Users may distrust AI because of its “black box” nature. | Builds user trust by making AI decisions clearer and easier to justify. |
| Ethical concerns | Raises concerns about the ethical use of AI, especially in sensitive applications. | Helps address ethical concerns by making decision-making processes transparent. |
| Bias and fairness | Inherent biases are difficult to detect and correct. | Helps detect and reduce bias, leading to fairer outcomes. |
| Debugging and error checking | Opaque algorithms make it hard to find the causes of errors. | Simplifies debugging by tracing and explaining decision paths. |

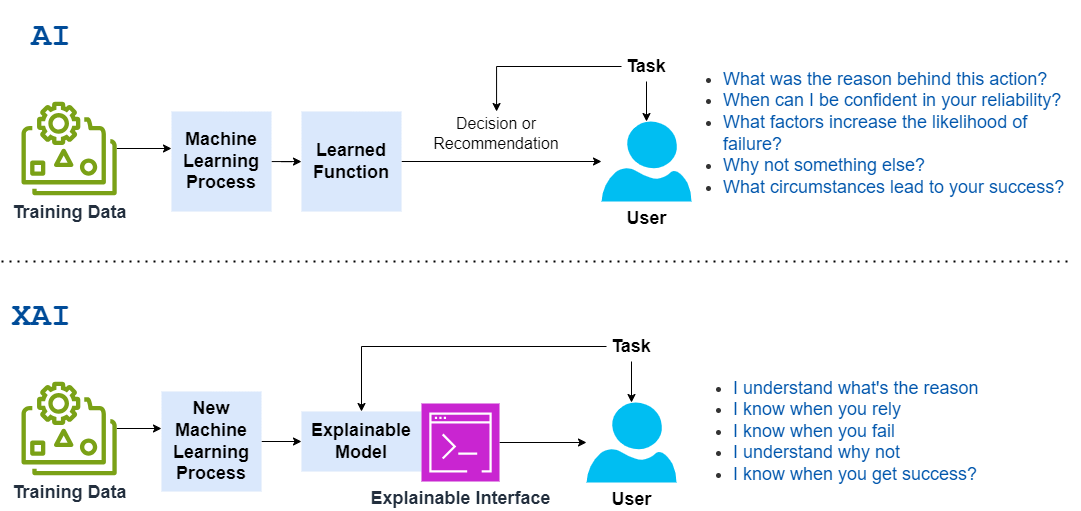

Explainable AI (XAI) and standard AI models differ in their levels of transparency and accountability. Traditional AI models, particularly deep learning models and neural networks, are often called “black boxes” because it is hard to see how they arrive at specific results. This opacity is a problem for users in critical settings such as healthcare, finance, and the legal system. XAI prioritizes explainability and understanding, providing ways to explain the logic behind AI-generated results. This lets users understand the “why” and “how” of AI decisions, which improves trust, auditability, and regulatory compliance. While traditional AI focuses on high performance and accuracy, XAI pairs these goals with transparency so that people can understand and assess AI-based results.

How does Explainable AI work?

Explainable AI (XAI) uses a range of techniques to make the decision-making of AI models, particularly complex ones such as deep learning models and neural networks, more transparent and accessible to people. Here’s how it typically works:

Feature Importance: XAI identifies the main features or input variables that influence the model’s results.

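Local Interpretability: Methods such as Local Interpretable Model-agnostic Explanations (LIME) and SHapley Additive exPlanations (SHAP) explain individual predictions. LIME fits a simple, interpretable model that approximates the complex model around a single input, while SHAP assigns each feature a contribution to a specific prediction based on Shapley values from cooperative game theory.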

Model Visualization: Heatmaps, decision trees, and activation maps show how different variables and features affect outputs.

Decision Rules: Some XAI systems extract human-readable rules from AI models that explain the reasoning behind a specific conclusion.

Surrogate Models: Simpler, interpretable models are trained to mimic the behavior of more complex models.

Counterfactual Analysis: This examines what changes to an input would lead to a different result.

Case-based Justifications: These explain a prediction by pointing to similar cases or examples the algorithm used to produce it.

Transparent by Design: Some AI models are built with interpretability in mind, which makes them simpler to explain and understand.
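To make the feature-importance idea from the list above concrete, here is a minimal sketch of permutation importance, one common way to measure it: shuffle one input column at a time and record how much the model’s error grows. The model `black_box`, the synthetic data, and the helper names are hypothetical; in practice you would use a trained model and held-out data with real targets.

```python
import random


def black_box(x):
    # Hypothetical model: strong dependence on x[0], weak on x[1], none on x[2].
    return 3.0 * x[0] + 0.5 * x[1]


def mean_squared_error(model, X, y):
    return sum((model(row) - target) ** 2 for row, target in zip(X, y)) / len(X)


def permutation_importance(model, X, y, n_repeats=5, seed=0):
    """Average increase in error when each feature column is randomly shuffled."""
    rng = random.Random(seed)
    baseline = mean_squared_error(model, X, y)
    importances = []
    for j in range(len(X[0])):
        increases = []
        for _ in range(n_repeats):
            column = [row[j] for row in X]
            rng.shuffle(column)
            # Copy each row, swapping in the shuffled value for feature j.
            X_perm = [row[:j] + [v] + row[j + 1:] for row, v in zip(X, column)]
            increases.append(mean_squared_error(model, X_perm, y) - baseline)
        importances.append(sum(increases) / n_repeats)
    return importances


data_rng = random.Random(42)
X = [[data_rng.uniform(-1, 1) for _ in range(3)] for _ in range(200)]
y = [black_box(row) for row in X]  # noiseless targets, so the baseline error is 0
scores = permutation_importance(black_box, X, y)
print("importance per feature:", [round(s, 3) for s in scores])
```

Because `x[2]` never enters the model, shuffling it leaves the error unchanged, while shuffling `x[0]` hurts far more than shuffling `x[1]`.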
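The Shapley values that SHAP builds on, mentioned under local interpretability above, can be illustrated with an exact calculation on a tiny model. This sketch enumerates every subset of features and, as a simplifying assumption, treats a feature as “absent” by replacing it with a fixed baseline value. The model, baseline, and function names are hypothetical, and practical SHAP implementations rely on approximations or model-specific algorithms because exact enumeration grows exponentially with the number of features.

```python
from itertools import combinations
from math import factorial


def model(x):
    # Hypothetical model: x[0] and x[1] interact, x[2] is ignored entirely.
    return 2.0 * x[0] + x[1] + x[0] * x[1]


def coalition_value(model, x, baseline, subset):
    """Model output when only the features in `subset` take their real values."""
    z = [x[i] if i in subset else baseline[i] for i in range(len(x))]
    return model(z)


def shapley_values(model, x, baseline):
    n = len(x)
    phis = []
    for i in range(n):
        others = [j for j in range(n) if j != i]
        phi = 0.0
        for size in range(n):  # coalitions of 0 .. n-1 other features
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                with_i = coalition_value(model, x, baseline, s | {i})
                without_i = coalition_value(model, x, baseline, s)
                phi += weight * (with_i - without_i)
        phis.append(phi)
    return phis


x = [1.0, 2.0, 3.0]
baseline = [0.0, 0.0, 0.0]  # assumed reference input standing in for "absent"
phi = shapley_values(model, x, baseline)
print("attributions:", [round(p, 6) for p in phi])
print("sum:", round(sum(phi), 6), "vs model(x) - model(baseline):", model(x) - model(baseline))
```

The attributions sum to `model(x) - model(baseline)`, the ignored feature `x[2]` receives zero, and the interaction term `x[0] * x[1]` is split equally between the two features that form it.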
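The surrogate-model and decision-rule ideas from the list above can be combined in a small sketch: train a one-rule “decision stump” to imitate a black-box classifier and report its fidelity, the fraction of inputs on which the rule agrees with the black box. The classifier, data, and helper names are hypothetical, and the stump only searches rules of the form `x[j] > t`; real surrogates are usually deeper trees or sparse linear models.

```python
import random


def black_box(x):
    # Hypothetical opaque classifier with a curved decision boundary.
    return 1 if x[0] ** 2 + 0.2 * x[1] > 0.4 else 0


def fit_stump(X, labels):
    """Find the rule 'predict 1 if x[j] > t' that best agrees with `labels`."""
    best_fidelity, best_feature, best_threshold = 0.0, None, None
    for j in range(len(X[0])):
        for t in sorted({row[j] for row in X}):
            agree = sum((row[j] > t) == bool(label) for row, label in zip(X, labels))
            fidelity = agree / len(X)
            if fidelity > best_fidelity:
                best_fidelity, best_feature, best_threshold = fidelity, j, t
    return best_fidelity, best_feature, best_threshold


rng = random.Random(0)
X = [[rng.random(), rng.random()] for _ in range(500)]
labels = [black_box(x) for x in X]  # the surrogate learns from the black box's outputs
fidelity, feature, threshold = fit_stump(X, labels)
print(f"Surrogate rule: predict 1 if x[{feature}] > {threshold:.3f} (fidelity {fidelity:.1%})")
```

The printed rule is a human-readable approximation of the black box; its fidelity tells you how far that simplified explanation can be trusted.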
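Counterfactual analysis, as described in the list above, can be sketched as a brute-force search that nudges a single input until the model’s decision flips. The credit model, its weights, and the applicant below are hypothetical; practical counterfactual methods search over several features at once and add constraints so that suggested changes stay realistic, which this sketch does not do.

```python
def approve(applicant):
    # Hypothetical credit model (amounts in thousands): approve when score >= 0.
    score = 2 * applicant["income"] - 5 * applicant["debt"] - 100
    return score >= 0


def single_feature_counterfactual(model, x, feature, step, max_steps=1000):
    """Nudge one feature up or down in fixed steps until the decision flips.

    Returns the first (smallest-change) modified input found, or None.
    """
    original = model(x)
    for k in range(1, max_steps + 1):
        for direction in (1, -1):
            candidate = dict(x)  # copy so the original input is untouched
            candidate[feature] = x[feature] + direction * k * step
            if model(candidate) != original:
                return candidate
    return None


applicant = {"income": 50, "debt": 4}
print("approved:", approve(applicant))
cf_income = single_feature_counterfactual(approve, applicant, "income", step=1)
cf_debt = single_feature_counterfactual(approve, applicant, "debt", step=1)
print("change income:", cf_income)
print("change debt:", cf_debt)
```

Here the search reports that the rejected applicant would be approved with an income of 60 or with debt reduced to 0, each while holding the other feature fixed.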

Overall, explainable AI helps maintain transparency, regulatory compliance, and confidence in AI systems by opening up the “black box” of complex algorithms. It bridges the gap between powerful machine learning and people’s need to understand the “why” behind AI-driven results.

Limitations

A major challenge in XAI research is the lack of agreement on fundamental terms such as explainability and interpretability. Their meanings vary across publications and contexts: some authors use them interchangeably, while others define them differently. Establishing a common vocabulary for defining and investigating XAI topics will require clearer, more precise terminology, including domain-specific terms.

Practical guidance on selecting, developing, and evaluating explanations for XAI approaches is limited. Explanations have been found to improve understanding of ML systems across a wide range of audiences, but whether they build confidence among non-AI specialists is still debated. Research is ongoing into how explanations can establish trust among non-AI professionals, with dynamic explanations showing promise.

The debate about explainability in AI systems is ongoing. Some advocate simpler, easier-to-understand models, while others argue that opaque models are acceptable as long as they are extensively validated for accuracy and reliability, especially in sensitive areas such as healthcare. Research suggests that providing technical information about AI is not enough; focusing on social transparency, which takes human values, ethics, and trust into account, is critical for ensuring that AI systems perform properly and meet societal expectations.