WHAT IS GENERATIVE AI?

Generative artificial intelligence (AI) refers to the use of AI to create new content, including text, images, music, audio, and video.

Generative AI relies on foundation models: large AI models that can multitask and perform tasks such as summarization, question answering, and classification out of the box. Foundation models can also be adapted to specific use cases with minimal training and little example data.

How Does Generative AI Work?

Generative AI starts with a prompt, which can be text, an image, a video, a design, musical notes, or any other input the AI system can process. Various AI algorithms then generate new content in response to that prompt. The content can include essays, solutions to problems, or realistic fakes created from images or audio of a real person.
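
The prompt-then-generate loop can be illustrated with a deliberately tiny text generator. This is a minimal sketch, not how production systems work: it assumes a toy bigram model (the hypothetical `train_bigram` and `generate` helpers) that records which word follows which in a small corpus, then continues a prompt one word at a time. Real generative AI replaces the lookup table with a large neural network, but the loop (predict the next token from context, append it, repeat) has the same shape.

```python
import random
from collections import defaultdict

def train_bigram(corpus: str) -> dict:
    """Record, for every word, the words observed immediately after it."""
    follows = defaultdict(list)
    words = corpus.split()
    for current, nxt in zip(words, words[1:]):
        follows[current].append(nxt)
    return dict(follows)

def generate(model: dict, prompt: str, max_new_words: int = 10, seed: int = 0) -> str:
    """Continue the prompt one word at a time, sampling from observed successors."""
    rng = random.Random(seed)
    words = prompt.split()
    for _ in range(max_new_words):
        if not words:
            break  # nothing to condition on
        candidates = model.get(words[-1])
        if not candidates:
            break  # the model has never seen anything follow this word
        words.append(rng.choice(candidates))
    return " ".join(words)

corpus = "the cat sat on the mat and the cat ran to the door"
model = train_bigram(corpus)
print(generate(model, "the cat", max_new_words=6))
```

Changing the prompt changes the continuation, which is the same lever users pull when they rephrase a request to a generative AI tool.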

Early versions of generative AI required submitting data through an API or an otherwise complicated process. Developers had to familiarize themselves with specialized tools and write applications in programming languages such as Python.

Today, generative AI developers are building better user interfaces that let people describe their requests in plain language. After an initial response, users can refine the results by giving feedback on the style, tone, and other qualities they want the generated content to have.

Generative AI examples

Generative AI tools are available for a range of modalities, including text, images, music, code, and voice. Here are several well-known ones:

- GPT, Jasper, AI-Writer, and Lex are examples of text-generation tools.

- DALL-E 2, Midjourney, and Stable Diffusion are well-known image-generation tools.

- Amper, Dadabots, and MuseNet are examples of music-generation tools.

- CodeStarter, Codex, GitHub Copilot, and Tabnine are examples of code-generation tools.

- Descript, Listnr, and Podcast.ai are examples of voice-synthesis tools.

- Prominent companies offering AI chip design tools include Synopsys, Cadence, Google, and Nvidia.

Generative AI vs Traditional AI

| Aspect | Generative AI | Traditional AI |
|---|---|---|
| Primary focus | Producing new content, including chat responses, designs, synthetic data, and deepfakes | Analyzing input data to produce predefined outputs and rule-based decisions |
| Methods | Transformers, variational autoencoders (VAEs), and generative adversarial networks (GANs) | Convolutional neural networks, recurrent neural networks, and reinforcement learning models |
| How it starts | A prompt: a user or data source submits an initial query or data set that guides content generation | Typically follows a predefined set of rules to analyze input and produce results |
| Best suited for | Natural language processing (NLP) tasks and tasks that require generating new content | Rule-based analysis with predetermined outcomes |

Generative AI excels at natural language processing and creative content production, whereas other AI approaches rely on predefined rules and structured processing to produce unambiguous outputs and rule-based decisions. The choice between them should depend on factors such as the degree of novelty required, the complexity of the task, and resource constraints. Matching each technique's strengths to the requirements of the problem yields the most efficient solution.

Generative AI models

Two families of generative AI models are currently in widespread use: generative adversarial networks (GANs) and transformer-based models. We examine each below.

Generative Adversarial Networks (GANs) can create visual and multimedia artifacts from both image and text input data.

A generative adversarial network (GAN) is a machine learning approach that pits two neural networks, the generator and the discriminator, against each other, hence the word “adversarial” in its name. The contest is framed as a zero-sum game, in which one network's gain is the other network's loss.
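
The zero-sum game is usually written as the minimax value function from the original 2014 GAN paper. Here $D(x)$ is the discriminator's estimated probability that $x$ is real, $G(z)$ is the generator's output for a random noise vector $z$, $p_{\text{data}}$ is the distribution of the real data, and $p_z$ is the noise distribution:

```latex
\min_G \max_D V(D, G) =
  \mathbb{E}_{x \sim p_{\text{data}}(x)}\big[\log D(x)\big]
  + \mathbb{E}_{z \sim p_z(z)}\big[\log\big(1 - D(G(z))\big)\big]
```

The discriminator tries to maximize $V$ by scoring real samples near 1 and generated samples near 0; the generator tries to minimize $V$ by pushing $D(G(z))$ toward 1. In practice, the generator is often trained to maximize $\log D(G(z))$ instead, which gives stronger gradients early in training.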

Ian Goodfellow and his colleagues at the University of Montreal introduced GANs in 2014 in the paper “Generative Adversarial Networks.” Since then, extensive research and practical applications have made GANs one of the most prominent generative AI models.

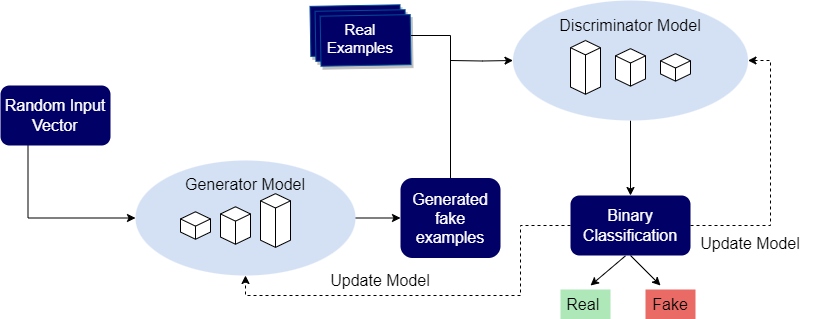

Figure 1: GAN Architecture

A GAN has two components: a generator, which creates fake samples from a random input vector, and a discriminator, which decides whether a given sample is a fake produced by the generator or a real sample from the training domain. The discriminator is a binary classifier that outputs a probability between 0 and 1: values closer to 0 indicate that the sample is more likely fake, and values closer to 1 indicate that it is more likely real. Both the generator and the discriminator are often implemented as convolutional neural networks (CNNs), especially for image tasks.
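
The discriminator's role as a binary classifier can be illustrated with a one-dimensional toy. This is a sketch under stated assumptions: the linear form `sigmoid(w*x + c)`, the 0.5 decision threshold, and the parameter values are illustrative choices, whereas real GANs use deep networks such as CNNs. The losses shown are standard binary cross-entropy for the discriminator and the commonly used "non-saturating" loss for the generator.

```python
import math

def sigmoid(s: float) -> float:
    # Clamp the logit so math.exp cannot overflow on extreme inputs
    s = max(-60.0, min(60.0, s))
    return 1.0 / (1.0 + math.exp(-s))

def discriminator(x: float, w: float, c: float) -> float:
    """Toy linear discriminator: probability (0 to 1) that x is real."""
    return sigmoid(w * x + c)

def classify(p_real: float, threshold: float = 0.5) -> str:
    # Near 1 means "probably real", near 0 means "probably fake"
    return "real" if p_real >= threshold else "fake"

def discriminator_loss(d_real: float, d_fake: float) -> float:
    # Binary cross-entropy: label 1 for the real sample, 0 for the generated one
    return -(math.log(d_real) + math.log(1.0 - d_fake))

def generator_loss(d_fake: float) -> float:
    # Low when the discriminator scores the generated sample as real
    return -math.log(d_fake)

print(classify(discriminator(3.0, w=2.0, c=-1.0)))   # real
print(classify(discriminator(-3.0, w=2.0, c=-1.0)))  # fake
```

The two losses pull in opposite directions on the same quantity, `d_fake`: the discriminator wants it near 0, the generator wants it near 1.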

The adversarial nature of GANs comes from a game-theoretic setup in which the generator network competes against the discriminator network. The generator tries to produce samples the discriminator cannot tell apart from real data, while the discriminator tries to distinguish samples from the training data from samples produced by the generator. In this simplified picture, the network that loses a round is updated, while the winner stays unchanged. A GAN is considered successful when the generator produces fakes convincing enough to fool both the discriminator and human observers. Even then, the game continues, because the discriminator must keep being updated and improved.
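
In practice, GAN training alternates an update of the discriminator with an update of the generator. The sketch below shows that alternation on one-dimensional data using hand-derived gradients. It is a toy under explicit assumptions: real data is drawn from N(4, 1), the generator is the hypothetical linear map `G(z) = a*z + b`, the discriminator is `sigmoid(w*x + c)`, and the learning rate and step count are arbitrary.

```python
import math
import random

def sigmoid(s: float) -> float:
    # Clamp the logit so math.exp cannot overflow
    s = max(-60.0, min(60.0, s))
    return 1.0 / (1.0 + math.exp(-s))

def train_toy_gan(steps: int = 2000, lr: float = 0.01,
                  real_mean: float = 4.0, seed: int = 0):
    """Alternating GAN updates on 1-D data with hand-derived gradients.

    Real data ~ N(real_mean, 1). Generator G(z) = a*z + b with z ~ N(0, 1).
    Discriminator D(x) = sigmoid(w*x + c), the probability that x is real.
    """
    rng = random.Random(seed)
    a, b = 1.0, 0.0  # generator parameters
    w, c = 0.0, 0.0  # discriminator parameters
    for _ in range(steps):
        # 1) Discriminator step: minimize -log D(x_real) - log(1 - D(x_fake))
        x_real = rng.gauss(real_mean, 1.0)
        x_fake = a * rng.gauss(0.0, 1.0) + b
        d_real = sigmoid(w * x_real + c)
        d_fake = sigmoid(w * x_fake + c)
        w -= lr * ((d_real - 1.0) * x_real + d_fake * x_fake)
        c -= lr * ((d_real - 1.0) + d_fake)

        # 2) Generator step (discriminator frozen): minimize -log D(G(z))
        z = rng.gauss(0.0, 1.0)
        d_fake = sigmoid(w * (a * z + b) + c)
        grad_x = (d_fake - 1.0) * w  # dLoss/dx evaluated at x = G(z)
        a -= lr * grad_x * z
        b -= lr * grad_x
    return a, b, w, c

a, b, w, c = train_toy_gan()
print(f"generator offset b = {b:.2f} (real mean is 4.0)")
```

Because a linear discriminator can only compare averages, this toy can at most teach the generator to shift its samples toward the real mean, and, like many GANs, it may oscillate around the target rather than settle.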

Transformer-based models, such as Generative Pre-trained Transformer (GPT) language models, can use information gathered from the internet to create textual content ranging from online articles to press releases and whitepapers.

Transformers are deep neural networks that learn context, and therefore meaning, by tracking relationships in sequential data, which makes them well suited to natural language processing (NLP) applications.

Well-known transformer-based models include GPT-3, a family of deep learning language models built by OpenAI that can generate human-like text such as poems, emails, and jokes, and LaMDA, a family of conversational neural language models built on Google's Transformer architecture. The transformer itself is a semi-supervised learning approach for transforming one sequence into another.

Transformers are typically pre-trained without supervision on a large unlabeled dataset and then fine-tuned with supervised training on labeled data to improve performance on specific tasks.

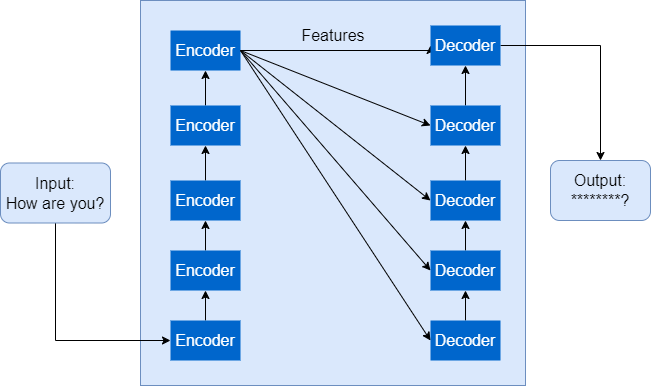

Figure 2: Encoder-Decoder Model

The original transformer architecture has two components: an encoder and a decoder. The encoder extracts features from an input sequence, converts them into vectors, and passes them to the decoder, which uses them to generate the output sequence. Each component is built from a stack of blocks, with the output of one block serving as the input to the next. Transformers use sequence-to-sequence learning to predict the next word (token) of the output sequence, passing the input through successive encoder layers. They use attention mechanisms to detect subtle patterns in how the elements of a sequence influence and depend on one another.
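
The attention step can be sketched as the scaled dot-product attention, softmax(QKᵀ/√d_k)V, defined in the 2017 paper “Attention Is All You Need.” This pure-Python version rests on simplifying assumptions: in a real transformer, the queries Q, keys K, and values V come from learned linear projections of the token embeddings and are split across several heads, whereas here toy vectors are passed in directly. The optional causal mask is the one a decoder uses so that each position can see only earlier positions when predicting the next token.

```python
import math

def matmul(a, b):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))]
            for i in range(len(a))]

def transpose(m):
    return [list(col) for col in zip(*m)]

def softmax(row):
    m = max(row)  # subtract the max for numerical stability
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]

def attention(q, k, v, causal=False):
    """Scaled dot-product attention: softmax(Q K^T / sqrt(d_k)) V.

    With causal=True, position i may attend only to positions <= i.
    Returns the attended outputs and the attention weights.
    """
    d_k = len(k[0])
    scores = [[s / math.sqrt(d_k) for s in row] for row in matmul(q, transpose(k))]
    if causal:
        scores = [[s if j <= i else -math.inf for j, s in enumerate(row)]
                  for i, row in enumerate(scores)]
    weights = [softmax(row) for row in scores]
    return matmul(weights, v), weights

# Three toy 2-D token vectors, used directly as queries, keys, and values
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
out, weights = attention(x, x, x, causal=True)
for row in weights:
    print([round(w, 3) for w in row])
```

Each row of `weights` sums to 1 and says how much each position draws on the others; with the causal mask, the first token can attend only to itself.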

Transformers try to find the context that gives meaning to each word in a sequence. Because they process all positions of a sequence in parallel, rather than one step at a time as recurrent networks do, and can handle many sequences at once, training is faster.
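
How context shapes a word's meaning can be shown with simplified self-attention. This is an illustrative sketch, assuming hypothetical two-dimensional embeddings (one dimension loosely "nature", the other "finance") and no learned projections, so the queries, keys, and values are the embeddings themselves. The ambiguous word "bank" ends up with a different vector depending on its neighbors, and because each position's output is computed independently, the loop over positions is the part a real implementation runs in parallel.

```python
import math

def softmax(row):
    m = max(row)  # subtract the max for numerical stability
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(x):
    """Simplified self-attention with Q = K = V = x (no learned projections)."""
    d = len(x[0])
    out = []
    for q in x:  # each position is independent, so these could run in parallel
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in x]
        weights = softmax(scores)
        out.append([sum(wt * vec[j] for wt, vec in zip(weights, x)) for j in range(d)])
    return out

# Hypothetical embeddings: dimension 0 ~ "nature", dimension 1 ~ "finance"
EMB = {"the": [0.1, 0.1], "river": [1.0, 0.0], "money": [0.0, 1.0], "bank": [0.5, 0.5]}

def contextual_vector(sentence, word):
    x = [EMB[w] for w in sentence]
    return self_attention(x)[sentence.index(word)]

v1 = contextual_vector(["the", "river", "bank"], "bank")
v2 = contextual_vector(["the", "money", "bank"], "bank")
print("bank next to river:", [round(v, 3) for v in v1])
print("bank next to money:", [round(v, 3) for v in v2])
```

In the first sentence "bank" leans toward the "nature" dimension and in the second toward "finance", even though its input embedding is identical in both.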

Challenges

Because generative AI learns from data on its own and can produce unexpected outputs, it can be difficult to control. Although we can steer AI systems, confronting these challenges is essential if the technology is to keep advancing. Responsible AI practices can mitigate the limitations of advances such as generative AI and help ensure that progress continues. It is therefore important to acknowledge the potential obstacles and adjust our approach accordingly.